Suggesting Natural Method Names to Check Name Consistencies

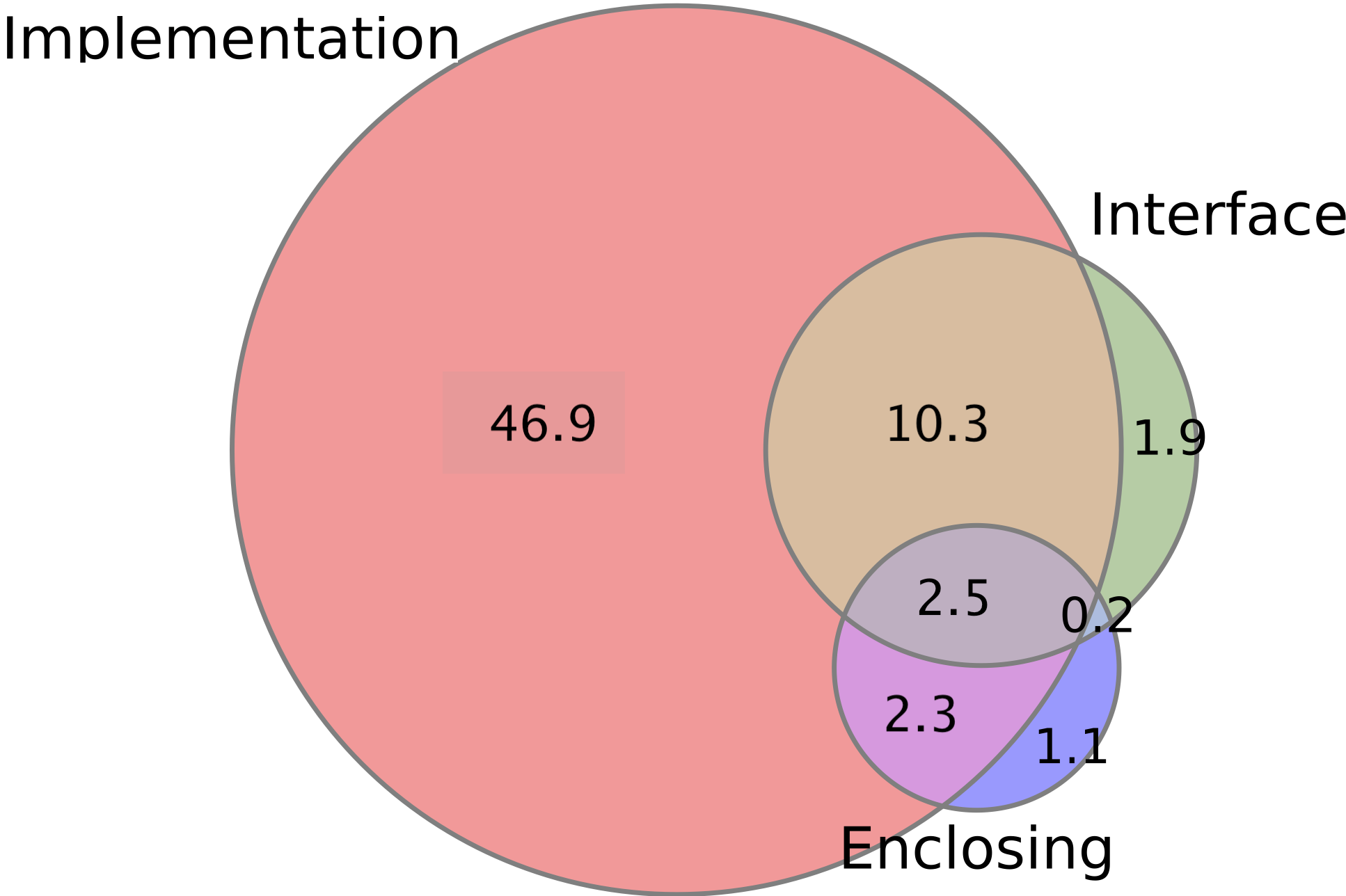

Misleading names of the methods in a project or the APIs in a software library confuse developers about program functionality and API usages, leading to API misuses and defects. In this paper, we introduce MNire, a machine learning approach to check the consistency between the name of a given method and its implementation. MNire first generates a candidate name and then compares the current name against it. If the two names are sufficiently similar, we consider the method consistent. To generate the method name, we draw our ideas and intuition from an empirical study on the nature of method names in a large dataset. Our key findings are that high proportions of the tokens of method names can be found in three contexts of a given method: its body, its interface (the method's parameter types and return type), and the name of its enclosing class. Even when such tokens are not present, MNire uses the contexts to predict them because of their high co-occurrence likelihoods. Our unique idea is to treat name generation as an abstractive summarization of the tokens collected from the names of the program entities in these three contexts.
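The check-by-generation step can be sketched as follows. This is a minimal sketch, not MNire's implementation: the candidate name is assumed to come from MNire's generator (not shown), and the subtoken splitter `split_identifier`, the Dice-style `name_similarity`, and the 0.5 threshold are hypothetical choices made only for illustration.

```python
import re


def split_identifier(name: str) -> list[str]:
    """Hypothetical helper: split a camelCase/snake_case identifier into lowercase subtokens."""
    parts = re.findall(r"[A-Z]+(?![a-z])|[A-Z]?[a-z]+|\d+", name)
    return [p.lower() for p in parts]


def name_similarity(current: str, candidate: str) -> float:
    """Assumed measure: Dice coefficient over the two names' subtoken sets."""
    a, b = set(split_identifier(current)), set(split_identifier(candidate))
    if not a and not b:
        return 1.0
    return 2 * len(a & b) / (len(a) + len(b))


def is_consistent(current: str, candidate: str, threshold: float = 0.5) -> bool:
    """Judge the method consistent if its current name is close to the generated candidate."""
    return name_similarity(current, candidate) >= threshold


print(is_consistent("getUserName", "getName"))      # True  (similarity 0.8)
print(is_consistent("setUp", "computeTotalPrice"))  # False (similarity 0.0)
```

Comparing subtoken sets rather than raw strings lets a near-miss such as `getUserName` vs. `getName` still count as consistent; the threshold trades recall on inconsistent names against false alarms.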

We conducted several experiments to evaluate MNire in method name consistency checking and in method name recommendation on large datasets with more than 14M methods. In detecting inconsistent method names, MNire improves over the state-of-the-art approach by 10.4% in precision and 11% in recall, relatively. In method name recommendation, MNire improves relatively over the state-of-the-art technique, code2vec, in both recall (18.2% higher) and precision (11.1% higher). To assess MNire's usefulness, we used it to detect inconsistent methods and suggest new names in several active GitHub projects. We made 50 pull requests (PRs) and received 42 responses. Among them, 5 PRs were merged into the main branch, and 13 were approved for later merging. In total, in 31 of the 42 cases, the developer teams agreed that our suggested names were more meaningful than the current names, showing MNire's usefulness.
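"Relatively" here means the gain is measured as a fraction of the baseline score. The short computation below reproduces the quoted percentages from the result tables on this page (IC precision/recall vs. Liu et al.; recommendation precision/recall vs. code2vec); the helper name `relative_improvement` is ours.

```python
def relative_improvement(baseline: float, new: float) -> float:
    """Relative gain of `new` over `baseline`, as a percentage of the baseline."""
    return (new - baseline) / baseline * 100


# Scores copied from the comparison tables on this page.
print(round(relative_improvement(56.8, 62.7), 1))  # 10.4  (IC precision vs. Liu et al.)
print(round(relative_improvement(84.5, 93.6), 1))  # 10.8  (IC recall vs. Liu et al.)
print(round(relative_improvement(63.1, 70.1), 1))  # 11.1  (precision vs. code2vec)
print(round(relative_improvement(54.4, 64.3), 1))  # 18.2  (recall vs. code2vec)
```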

Exploratory Study

Uniqueness of Method Names

62.9% of the full method names are unique (they occur only once). Thus, for a given method, one cannot rely on searching for a good name among previously seen method names.

In contrast, 78.1% of the tokens in method names can be found in other, previously seen method names.

| | Method name | Token |
|---|---|---|
| Mean #occurrences | 4.8 | 400.3 |
| Median #occurrences | 1 | 3 |
| #occurrences = 1 | 62.9% | 21.9% |
| #occurrences > 1 | 37.1% | 78.1% |
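Statistics like those in the table can be computed by counting occurrences of full names and of their subtokens. A minimal sketch on a toy corpus, assuming the percentages are taken over distinct names (or distinct tokens) and that names are split on camelCase/snake_case boundaries; `split_identifier` and `occurrence_stats` are hypothetical helpers.

```python
import re
from collections import Counter
from statistics import mean, median


def split_identifier(name: str) -> list[str]:
    """Split a camelCase/snake_case identifier into lowercase subtokens."""
    return [p.lower() for p in re.findall(r"[A-Z]+(?![a-z])|[A-Z]?[a-z]+|\d+", name)]


def occurrence_stats(items) -> dict:
    """Occurrence statistics over the distinct items (full names or tokens)."""
    counts = list(Counter(items).values())
    once = sum(1 for c in counts if c == 1)
    return {
        "mean": mean(counts),
        "median": median(counts),
        "pct_once": 100 * once / len(counts),
        "pct_more": 100 * (len(counts) - once) / len(counts),
    }


# Toy corpus; the study itself used a large dataset of real method names.
names = ["getName", "getName", "setName", "toString", "isEmpty"]
name_stats = occurrence_stats(names)
token_stats = occurrence_stats(t for n in names for t in split_identifier(n))
print(name_stats)
print(token_stats)
```

Even on this toy corpus the pattern in the table shows up: most full names occur once, while tokens such as `get` and `name` recur across names.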

Common tokens shared between a method name and the contexts

A high proportion of the tokens of method names also appear in the three contexts, and a high percentage of methods have names that share tokens with the names of the entities in those contexts.
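Both proportions can be measured per context. This is a sketch under stated assumptions: each method is given as a pair of its name and the identifiers of one context (e.g., names of entities used in its body), and the helper names are hypothetical.

```python
import re


def split_identifier(name: str) -> list[str]:
    """Split a camelCase/snake_case identifier into lowercase subtokens."""
    return [p.lower() for p in re.findall(r"[A-Z]+(?![a-z])|[A-Z]?[a-z]+|\d+", name)]


def shared_token_stats(methods):
    """methods: list of (method_name, context_identifiers) pairs.

    Returns (share of name tokens found in the context,
             share of methods whose name shares at least one token with the context).
    """
    total = found = sharing = 0
    for name, context in methods:
        ctx_tokens = {t for ident in context for t in split_identifier(ident)}
        name_tokens = split_identifier(name)
        hits = sum(1 for t in name_tokens if t in ctx_tokens)
        total += len(name_tokens)
        found += hits
        sharing += hits > 0  # count the method if any name token is shared
    return found / total, sharing / len(methods)


methods = [
    ("getUserName", ["userName", "String"]),
    ("computeTotal", ["price", "items"]),
]
print(shared_token_stats(methods))  # (0.4, 0.5)
```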

The conditional occurrences of tokens in method names on the contexts

When we encounter all the tokens of the names of the program entities used in a method's body, in 35.9% of the cases we also see a token in the method's name.

Even when the tokens are not found in the contexts, one can use the contexts to predict the tokens in method names, owing to these high conditional probabilities.
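The prediction idea relies on conditional co-occurrence: a context token can signal a name token even when that name token never appears in the context. Below is a sketch that estimates P(name token n | context token c) by counting over (name, context) pairs; it illustrates the notion only and is not how MNire's model computes its predictions.

```python
import re
from collections import Counter, defaultdict


def split_identifier(name: str) -> list[str]:
    """Split a camelCase/snake_case identifier into lowercase subtokens."""
    return [p.lower() for p in re.findall(r"[A-Z]+(?![a-z])|[A-Z]?[a-z]+|\d+", name)]


def conditional_probs(methods):
    """Estimate P(name token n | context token c) from (name, context_identifiers) pairs."""
    ctx_count = Counter()            # number of methods whose context contains c
    pair_count = defaultdict(Counter)  # pair_count[c][n]: methods with c in context and n in name
    for name, context in methods:
        name_tokens = set(split_identifier(name))
        ctx_tokens = {t for ident in context for t in split_identifier(ident)}
        for c in ctx_tokens:
            ctx_count[c] += 1
            for n in name_tokens:
                pair_count[c][n] += 1
    return {c: {n: k / ctx_count[c] for n, k in pair_count[c].items()} for c in ctx_count}


methods = [
    ("getName", ["name"]),
    ("setName", ["name", "value"]),
    ("getAge", ["age"]),
]
probs = conditional_probs(methods)
print(probs["value"]["set"])  # 1.0: "set" never occurs in any context, yet "value" predicts it
print(probs["name"]["get"])   # 0.5
```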

Each of the contexts is a stronger indicator of the tokens occurring in good (consistent) names than of those occurring in inconsistent names.

Accuracy Comparison

MNire outperformed the state-of-the-art approaches in both consistency checking and name recommendation.

43.1% of the suggested method names exactly match the oracle, whereas only 37.2% of the method names occur more than once.

13.1% of the generated names are not previously seen in the training data.
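The two rates above can be computed as follows. A minimal sketch, assuming exact match means plain string equality with the oracle name and "not previously seen" means absent from the set of training names (the page specifies neither normalization); the data are toy values.

```python
def exact_match_rate(predicted, oracle):
    """Percentage of suggestions identical to the oracle (developer-written) name."""
    return 100 * sum(p == o for p, o in zip(predicted, oracle)) / len(oracle)


def unseen_rate(predicted, training_names):
    """Percentage of suggestions that never occur among the training names."""
    seen = set(training_names)
    return 100 * sum(p not in seen for p in predicted) / len(predicted)


predicted = ["getName", "saveUser", "closeStream"]
oracle = ["getName", "storeUser", "closeStream"]
training = ["getName", "storeUser", "saveUser"]
print(round(exact_match_rate(predicted, oracle), 1))  # 66.7
print(round(unseen_rate(predicted, training), 1))     # 33.3
```

In this toy case `closeStream` is both an exact match and absent from training, the kind of result a pure lookup of previously seen names could not produce.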

IC: inconsistent names; C: consistent names.

| Class | Metric | Liu et al. | MNire |
|---|---|---|---|
| IC | Precision | 56.8 | 62.7 |
| IC | Recall | 84.5 | 93.6 |
| IC | F-score | 67.9 | 75.1 |
| C | Precision | 51.4 | 56.0 |
| C | Recall | 72.2 | 84.2 |
| C | F-score | 60.0 | 67.3 |
| | Accuracy | 60.9 | 68.9 |
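Consistency checking is a binary classification, so precision, recall, and F-score are reported per class and accuracy over both. The sketch below shows the standard per-class computation on toy labels, assuming "IC" and "C" label inconsistent and consistent methods.

```python
def per_class_prf(gold, pred, label):
    """Precision, recall, and F-score for one class of a labeled prediction list."""
    tp = sum(g == label and p == label for g, p in zip(gold, pred))
    fp = sum(g != label and p == label for g, p in zip(gold, pred))
    fn = sum(g == label and p != label for g, p in zip(gold, pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f


def accuracy(gold, pred):
    """Fraction of methods whose consistency label is predicted correctly."""
    return sum(g == p for g, p in zip(gold, pred)) / len(gold)


gold = ["IC", "IC", "C", "C"]
pred = ["IC", "IC", "IC", "C"]
print(per_class_prf(gold, pred, "IC"))
print(per_class_prf(gold, pred, "C"))
print(accuracy(gold, pred))  # 0.75
```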

| Metric | code2vec | MNire |
|---|---|---|
| Precision | 63.1 | 70.1 |
| Recall | 54.4 | 64.3 |
| F-score | 58.4 | 67.1 |
| Exact match | - | 43.1 |
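For name recommendation, precision and recall are typically computed over subtokens, as in code2vec's evaluation. The sketch assumes MNire uses the same micro-averaged, set-based subtoken scheme (the page does not state the exact protocol); it also checks that the F-scores in the table are the harmonic mean of the listed precision and recall.

```python
import re


def split_identifier(name: str) -> list[str]:
    """Split a camelCase/snake_case identifier into lowercase subtokens."""
    return [p.lower() for p in re.findall(r"[A-Z]+(?![a-z])|[A-Z]?[a-z]+|\d+", name)]


def f_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def subtoken_prf(pairs):
    """Micro-averaged subtoken precision/recall/F-score over (predicted, reference) name pairs."""
    tp = fp = fn = 0
    for predicted, reference in pairs:
        pred, ref = set(split_identifier(predicted)), set(split_identifier(reference))
        tp += len(pred & ref)  # predicted subtokens that appear in the reference
        fp += len(pred - ref)  # predicted subtokens missing from the reference
        fn += len(ref - pred)  # reference subtokens that were not predicted
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, f_score(precision, recall)


print(subtoken_prf([("getName", "getUserName"), ("saveFile", "writeFile")]))
print(round(f_score(70.1, 64.3), 1))  # 67.1, MNire's F-score in the table above
print(round(f_score(63.1, 54.4), 1))  # 58.4, code2vec's F-score
```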

Study on Accuracy by the Sizes of Methods

Context Analysis Evaluation Results

IMP: method body (implementation); INF: method interface; ENC: enclosing class name.

| Class | Metric | IMP | IMP+INF | IMP+ENC | IMP+INF+ENC (MNire) |
|---|---|---|---|---|---|
| IC | Precision | 60.2 | 61.7 | 61.0 | 62.7 |
| IC | Recall | 90.0 | 92.1 | 91.3 | 93.6 |
| IC | F-score | 72.1 | 73.9 | 73.1 | 75.1 |
| C | Precision | 53.2 | 55.1 | 54.1 | 56.0 |
| C | Recall | 79.3 | 82.3 | 80.6 | 84.2 |
| C | F-score | 63.7 | 66.0 | 64.7 | 67.3 |
| | Accuracy | 62.1 | 65.2 | 64.2 | 68.9 |

| Metric | IMP | IMP+INF | IMP+ENC | IMP+INF+ENC (MNire) |
|---|---|---|---|---|
| Precision | 49.7 | 63.2 | 54.4 | 66.4 |
| Recall | 43.3 | 57.8 | 48.9 | 61.1 |
| F-score | 46.3 | 60.4 | 51.5 | 63.6 |
| Exact match | 20.2 | 34.7 | 25.7 | 43.1 |
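The ablation varies which contexts feed the name generator. The sketch shows one hypothetical way to assemble the input token sequence for each configuration; the `MethodContexts` record, the ordering body, then interface, then class, and the absence of separator tokens are all assumptions, since the page does not describe MNire's exact input layout.

```python
import re
from dataclasses import dataclass


def split_identifier(name: str) -> list[str]:
    """Split a camelCase/snake_case identifier into lowercase subtokens."""
    return [p.lower() for p in re.findall(r"[A-Z]+(?![a-z])|[A-Z]?[a-z]+|\d+", name)]


@dataclass
class MethodContexts:
    """Hypothetical container for the three contexts of one method."""
    body_identifiers: list[str]  # IMP: names of entities used in the body
    param_types: list[str]       # INF: parameter types
    return_type: str             # INF: return type
    class_name: str              # ENC: enclosing class name


def context_tokens(m: MethodContexts, use_inf: bool = True, use_enc: bool = True) -> list[str]:
    """Build the summarizer's input tokens; IMP is always included."""
    identifiers = list(m.body_identifiers)
    if use_inf:
        identifiers += m.param_types + [m.return_type]
    if use_enc:
        identifiers.append(m.class_name)
    return [t for ident in identifiers for t in split_identifier(ident)]


m = MethodContexts(["userRepository", "findById"], ["Long"], "User", "UserService")
print(context_tokens(m, use_inf=False, use_enc=False))  # IMP only
print(context_tokens(m))                                # IMP+INF+ENC
```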

Sensitivity Results

Accuracy with Different Representations

| Class | Metric | Lexeme | AST | Graph | MNire |
|---|---|---|---|---|---|
| IC | Precision | 59.0 | 57.2 | 55.3 | 62.7 |
| IC | Recall | 88.3 | 85.6 | 80.3 | 93.6 |
| IC | F-score | 70.7 | 68.6 | 65.5 | 75.1 |
| C | Precision | 47.1 | 46.2 | 45.8 | 56.0 |
| C | Recall | 78.2 | 73.5 | 72.1 | 84.2 |
| C | F-score | 58.8 | 56.8 | 56.0 | 67.3 |
| | Accuracy | 52.0 | 51.1 | 50.5 | 68.9 |

| Metric | Lexeme | AST | Graph | MNire |
|---|---|---|---|---|
| Precision | 29.5 | 23.1 | 16.2 | 50.6 |
| Recall | 25.1 | 29.2 | 30.3 | 45.1 |
| F-score | 27.1 | 25.9 | 21.1 | 47.7 |
| Exact match | 9.1 | 8.1 | 4.7 | 22.1 |

This result suggests that the naturalness of names is more important than structure for method name suggestion. While structures and dependencies are important for code execution, a method name is an abstract of the entire method; thus, using the tokens of the names in the contexts, as MNire does, yields better performance.

Impact of Contexts' Size and Lengths of Tokens in Contexts on Accuracy

| | 1-10 tokens | 10-20 tokens | 20-30 tokens | >30 tokens |
|---|---|---|---|---|
| F-score | 35.9 | 41.1 | 43.2 | 51.0 |

| | 0-80% | 80-90% | 90-95% | >95% |
|---|---|---|---|---|
| F-score | 37.0 | 39.4 | 42.5 | 48.5 |

Impact of Training Data’s Size on Accuracy

| Class | Metric | 1.0M | 1.25M | 1.5M | 1.75M | 2.0M |
|---|---|---|---|---|---|---|
| IC | Precision | 59.6 | 60.1 | 60.3 | 61.5 | 62.7 |
| IC | Recall | 92.2 | 92.5 | 93.2 | 93.4 | 93.6 |
| IC | F-score | 72.4 | 72.9 | 73.2 | 74.2 | 75.1 |
| C | Precision | 52.7 | 53.5 | 54.4 | 55.2 | 56.0 |
| C | Recall | 81.4 | 81.9 | 82.6 | 83.5 | 84.2 |
| C | F-score | 63.9 | 64.7 | 65.6 | 66.5 | 67.3 |
| | Accuracy | 62.6 | 63.8 | 65.8 | 67.3 | 68.9 |

| Metric | 1.0K | 2.5K | 5.0K | 7.5K | 10.0K |
|---|---|---|---|---|---|
| Precision | 41.1 | 56.2 | 63.1 | 64.9 | 66.4 |
| Recall | 47.8 | 53.7 | 57.6 | 59.5 | 61.1 |
| F-score | 44.2 | 54.9 | 60.2 | 62.0 | 63.6 |
| Exact match | 19.9 | 29.4 | 34.8 | 26.9 | 38.2 |

Impact of Threshold for Consistency Checking

Datasets

| | Test data | Train data |
|---|---|---|
| #Methods | 2,700 | 1,962,872 |
| #Files | - | 250,972 |
| #Projects | - | 430 |
| #Unique method names | - | 540,237 |
| #occurrences > 1 | - | 33.5% |

| | Test data | Train data | Total |
|---|---|---|---|
| Comparison Experiment with code2vec (Download) | | | |
| #Files | 61,641 | 1,746,272 | 1,807,913 |
| #Methods | 458,800 | 14,000,028 | 14,458,828 |
| Experiments for RQ4, RQ5, and RQ6 (Download) | | | |
| #Projects | 450 | 9,772 | 10,222 |
| #Files | 51,631 | 1,756,282 | 1,807,913 |
| #Methods | 466,800 | 13,992,028 | 14,458,828 |
| Live Study on Real Developers (Download) | | | |
| #Projects | 100 | - | 100 |
| #Files | 18,980 | - | 18,980 |
| #Methods | 139,827 | - | 139,827 |